PowerShell is a powerful tool to master. Here’s our step-by-step guide to getting familiar with Windows’ über language.

If you’ve wrestled with Windows 10, you’ve undoubtedly heard of PowerShell. If you’ve tried to do something fancy with Win7/8.1 recently, PowerShell’s probably come up, too. After years of relying on the Windows command line and tossed-together batch files, it’s time to set your sights on something more powerful, more adaptive — better.

PowerShell is an enormous addition to the Windows toolbox, and it can provoke a bit of fear given that enormity. Is it a scripting language, a command shell, a floor wax? Do you have to link a cmdlet with an instantiated .Net class to run with providers? And why do all the support docs talk about administrators — do I have to be a professional Windows admin to make use of it?

Relax. PowerShell is powerful, but it needn’t be intimidating.

The following guide is aimed at those who have run a Windows command or two or jimmied a batch file. Consider it a step-by-step transformation from PowerShell curious to PowerShell capable.

Step 1: Crank it up

The first thing you’ll need is PowerShell itself. If you’re using Windows 10, you already have PowerShell 5 (the latest version) installed. (Win10 Anniversary Update has 5.1, but you won’t notice the difference from the Fall Update’s 5.0.) Windows 8 ships with PowerShell 3 and Windows 8.1 with PowerShell 4, either of which is good enough for getting your feet wet. Installing PowerShell on Windows 7 isn’t difficult, but it takes extra care, and you need to install .Net Framework separately. On MSDN, JuanPablo Jofre details how to install WMF 5.0 (Windows Management Framework), which includes PowerShell along with tools you won’t likely use when starting out.
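If you’re not sure which version you ended up with, you can ask PowerShell itself once the console is open. This is a minimal sketch that relies on the built-in $PSVersionTable variable; the $version name and the message text are only illustrative.

```powershell
# $PSVersionTable is an automatic variable that describes the running shell.
$version = $PSVersionTable.PSVersion

# Print the major and minor version numbers in a readable sentence.
"Running PowerShell {0}.{1}" -f $version.Major, $version.Minor
```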

PowerShell offers two interfaces. Advanced users will go for the full-blown GUI, known as the Integrated Scripting Environment (ISE). Beginners, though, are best served by the PowerShell Console, a simple text interface reminiscent of the Windows command line, or even DOS 3.2.

To start PowerShell as an Administrator from Windows 10, click Start and scroll down the list of apps to Windows PowerShell. Click on that line, right-click Windows PowerShell, and choose Run as Administrator. In Windows 8.1, look for Windows PowerShell in the Windows System folder. In Win7, it’s in the Accessories folder. You can run PowerShell as a “normal” user by following the same sequence but with a left click.

In any version of Windows, you can use Windows search to look for PowerShell. In Windows 8.1 and Windows 10, you can put it on your Win-X “Power menu” (right-click a blank spot on the taskbar and choose Properties; on the Navigation tab, check the box to Replace Command Prompt). Once you have it open, it’s a good idea to pin PowerShell to your taskbar. Yes, you’re going to like it that much.

Step 2: Type old-fashioned Windows commands

You’d be amazed how much Windows command-line syntax works as expected in PowerShell.

For example, cd changes directories (aka folders), and dir still lists all the files and folders included in the current folder.

Depending on how you start the PowerShell console, you may start at c:\Windows\system32 or at c:\Users\<username>. In the screenshot example, I use cd .. (note the space) to move up one level at a time, then run dir to list all files and subfolders in the C:\ directory.

Step 3: Install the help files

Commands like cd and dir aren’t native PowerShell commands. They’re aliases — substitutes for real PowerShell commands. Aliases can be handy for those of us with finger memory that’s hard to overcome. But they don’t even begin to touch the most important parts of PowerShell.

To start getting a feel for PowerShell itself, type help followed by a command you know. For example, in the screenshot, I type help dir.

PowerShell help tells me that dir is an alias for the PowerShell command Get-ChildItem. Sure enough, if you type get-childitem at the PS C:\> prompt, you see exactly what you saw with the dir command.
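You can also ask for the alias mapping directly instead of reading it out of the help text. The sketch below uses the Get-Alias cmdlet; the $alias variable name is just for illustration.

```powershell
# Look up what the alias 'dir' really runs.
$alias = Get-Alias -Name dir
$alias.Definition    # the cmdlet behind the alias

# Go the other way: list every alias that points at Get-ChildItem.
Get-Alias -Definition Get-ChildItem
```

The second command is handy when you suspect that several old-style commands all lead to the same cmdlet.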

As noted at the bottom of the screenshot, help files for PowerShell aren’t installed automatically. To retrieve them (you do want to get them), log on to PowerShell in Administrator mode, then type update-help. Installing the help files will take several minutes, and you may be missing a few modules — Help for NetWNV and SecureBoot failed to install on my test machine. But when you’re done, the full help system will be at your beck and call.

From that point on, type get-help followed by the command (“cmdlet” in PowerShell speak, pronounced “command-let”) that concerns you and see all of the help for that item. For example, get-help get-childitem produces a summary of the get-childitem options. It also prompts you to type in variations on the theme. Thus, the following:

get-help get-childitem -examples

produces seven detailed examples of how to use get-childitem. The PowerShell command

get-help get-childitem -detailed

includes those seven examples, as well as a detailed explanation of every parameter available for the get-childitem cmdlet.

Step 4: Get help on the parameters

In the help dir screenshot, you might have noticed there are two listings under SYNTAX for get-childitem. The fact that there are two separate syntaxes for the cmdlet means there are two ways of running the cmdlet. How do you keep the syntaxes separate — and what do the parameters mean? The answer’s easy, if you know the trick.

To get all the details about parameters for the get-childitem cmdlet, or any other cmdlet, use the -full parameter, like this:

get-help get-childitem -full

That produces a line-by-line listing of what you can do with the cmdlet and what may (or may not!) happen. See the screenshot.
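When the -full listing is more than you need, a couple of narrower queries may be easier to read. This is a sketch rather than the only way to do it; it assumes the -Parameter switch of Get-Help and the -Syntax switch of Get-Command, and $syntax is an illustrative variable name.

```powershell
# Show the help for a single parameter instead of the whole -full listing.
Get-Help Get-ChildItem -Parameter Recurse

# Print only the syntax lines, one per way of calling the cmdlet.
$syntax = Get-Command Get-ChildItem -Syntax
$syntax
```

Comparing the syntax lines side by side is a quick way to spot which parameters belong to which way of running the cmdlet.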

Sifting through the parameter details, it’s reasonably easy to see that get-childitem can be used to retrieve “child” items (such as the names of subfolders or filenames) in a location that you specify, with or without specific character matches. For example:

get-childitem "*.txt" -recurse

retrieves a list of all of the “*.txt” files in the current folder and all subfolders (due to the -recurse parameter). Whereas the following:

get-childitem "HKLM:\Software"

returns a list of all of the high-level registry keys in HKEY_LOCAL_MACHINE\Software.
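To try the recursive file search without touching your real files, you could build a small throwaway folder first. The sketch below does that under the temp directory; the folder and file names (ps-gci-demo, a.txt, and so on) are made up for the example.

```powershell
# Build a small throwaway folder tree under the temp directory (hypothetical names).
$root = Join-Path ([System.IO.Path]::GetTempPath()) 'ps-gci-demo'
$sub  = Join-Path $root 'sub'
New-Item -ItemType Directory -Path $sub -Force | Out-Null
Set-Content -Path (Join-Path $root 'a.txt') -Value 'top level'
Set-Content -Path (Join-Path $sub 'b.txt') -Value 'one level down'
Set-Content -Path (Join-Path $root 'c.log') -Value 'not a text file'

# Recursive search for .txt files, with the parameter names spelled out.
$textFiles = Get-ChildItem -Path $root -Filter '*.txt' -Recurse
$textFiles | Select-Object -ExpandProperty Name

# Clean up the demo folder.
Remove-Item -Path $root -Recurse -Force
```

Spelling out -Path and -Filter is optional, but it makes clear which value goes where when you come back to the command later.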

If you’ve ever tried to get inside the registry using a Windows command line or a batch file, I’m sure you can see how powerful this kind of access must be.

Step 5: Nail down the names

There’s a reason why the cmdlets we’ve seen so far look the same: get-childitem, update-help, and get-help all follow the same verb-noun convention. Mercifully, all of PowerShell’s cmdlets use this convention, with a verb preceding a (singular) noun. Those of you who spent weeks struggling over inconsistently named VB and VBA commands can breathe a sigh of relief.

To see where we’re going, take a look at some of the most common cmdlets (thanks to Ed Wilson’s Hey, Scripting Guy! blog). Start with the cmdlets that reach into your system and pull out useful information, like the following:

set-location: Sets the current working location to a specified location

get-content: Gets the contents of a file

get-item: Gets files and folders

copy-item: Copies an item from one location to another

remove-item: Deletes files and folders

get-process: Gets the processes that are running on a local or remote computer

get-service: Gets the services running on a local or remote computer

invoke-webrequest: Gets content from a web page on the internet

To see how a particular cmdlet works, use get-help, as in

get-help copy-item -full

Based on its help description, you can readily figure out what the cmdlet wants. For example, if you want to copy all your files and folders from Documents to c:\temp, you would use:

copy-item c:\users\[username]\documents\* c:\temp -recurse

As you type in that command, you’ll see a few nice touches built into the PowerShell environment. For example, if you type copy-i and press the Tab key, PowerShell fills in Copy-Item and a space.

If you mistype a cmdlet and PowerShell can’t figure it out, you get a very thorough description of what went wrong.
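Before copying anything important, it can help to preview the operation. The sketch below assumes the common -WhatIf switch, which Copy-Item supports, and uses two made-up folders under the temp directory so nothing of yours is touched.

```powershell
# Hypothetical source and destination folders under the temp directory.
$temp = [System.IO.Path]::GetTempPath()
$src  = Join-Path $temp 'ps-copy-src'
$dst  = Join-Path $temp 'ps-copy-dst'
New-Item -ItemType Directory -Path $src, $dst -Force | Out-Null
Set-Content -Path (Join-Path $src 'notes.txt') -Value 'hello'

# -WhatIf prints what would be copied without touching the disk.
Copy-Item -Path (Join-Path $src '*') -Destination $dst -Recurse -WhatIf

# Run it for real once the preview looks right.
Copy-Item -Path (Join-Path $src '*') -Destination $dst -Recurse
$copied = Test-Path (Join-Path $dst 'notes.txt')

# Remove the demo folders.
Remove-Item -Path $src, $dst -Recurse -Force
```

Many cmdlets that change things accept -WhatIf, so it is worth checking get-help for it whenever a command makes you nervous.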

Try this cmdlet. (It may try to get you to install a program to read the “about” box. If so, ignore it.)

invoke-webrequest netcal.com

You get a succinct list of the web page’s content declarations, headers, images, links, and more. See how that works? Notice in the get-help listing for invoke-webrequest that the invoke-webrequest cmdlet “returns collections of forms, links, images, and other significant HTML elements” — exactly what you should see on your screen.
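Because the result is an object, you can keep it in a variable and look at one piece at a time. This is only a sketch: example.com is a placeholder address, $response is an illustrative name, and -UseBasicParsing is included on the assumption that you want to avoid the Internet Explorer dependency in Windows PowerShell.

```powershell
# Keep the response object instead of letting it print to the screen.
# example.com is a placeholder; substitute any site you are allowed to query.
$response = Invoke-WebRequest -Uri 'https://example.com' -UseBasicParsing

$response.StatusCode                 # HTTP status of the request
$response.Headers['Content-Type']    # one of the response headers

# Link targets found in the page.
$response.Links | Select-Object -ExpandProperty href
```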

Some cmdlets help you control or grok PowerShell itself:

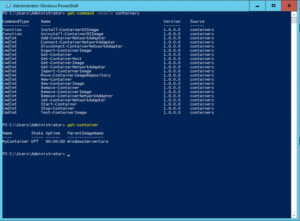

get-command: Lists all available cmdlets (it’s a long list!)

get-verb: Lists all available verbs (the left halves of cmdlets)

clear-host: Clears the display in the host program

Various parameters (remember, get-help) let you whittle down the commands and narrow in on options that may be of use to you. For example, to see a list of all the cmdlets that work with Windows services, try this:

get-command *-service

It lists all the verbs that are available with service as the noun. Here’s the result:

Get-Service

New-Service

Restart-Service

Resume-Service

Set-Service

Start-Service

Stop-Service

Suspend-Service
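The wildcard is one way to slice the list; get-command can also filter on the verb and noun halves separately. A short sketch, assuming the -Verb and -Noun parameters of Get-Command ($getCmds is an illustrative name):

```powershell
# Roughly the same list as get-command *-service, filtered by noun.
Get-Command -Noun Service

# Flip it around: commands whose verb is Get and whose noun starts with "Proc".
$getCmds = Get-Command -Verb Get -Noun Proc*
$getCmds | Select-Object -ExpandProperty Name
```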

You can combine these cmdlets with other cmdlets to dig down into almost any part of PowerShell. That’s where pipes come into the picture.

Step 6: Bring in the pipes

If you’ve ever used the Windows command line or slogged through a batch file, you know about redirection and pipes. In simple terms, both redirection (the > character) and pipes (the | character) take the output from an action and stick it someplace else. You can, for example, redirect the output of a dir command to a text file, or “pipe” the result of a ping command into a find, to filter out interesting results, like so:

dir > temp.txt

ping askwoody.com | find /i "packets" > temp2.txt

In the second command above, the find command looks for the string packets in the piped output of an askwoody.com ping and sticks all the lines that match in a file called temp2.txt.

Perhaps surprisingly, the first of those commands works fine in PowerShell. To run the second command, you want something like this:

ping askwoody.com | select-string packets | out-file temp2.txt
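To see what select-string hands back without waiting on a real ping, you can feed it a few lines of text directly. The lines below are invented stand-ins for command output, and $lines and $hits are illustrative names.

```powershell
# Stand-in for command output: a few lines of text (made up for this sketch).
$lines = @(
    'Reply from 192.0.2.1: bytes=32 time=20ms'
    'Packets: Sent = 4, Received = 4, Lost = 0'
    'Minimum = 19ms, Maximum = 22ms'
)

# Select-String ignores case by default, so 'packets' matches 'Packets'.
$hits = $lines | Select-String -Pattern 'packets'

# Each hit is a match object; its Line property holds the original text.
$hits | ForEach-Object { $_.Line }
```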

Using redirection and pipes greatly expands the Windows command line’s capabilities: Instead of scrolling endlessly down a screen looking for a text string, for example, you can put together a piped Windows command that does the vetting for you.

PowerShell has a piping capability, but it isn’t restricted to text. Instead, PowerShell lets you pass an entire object from one cmdlet to the next, where an “object” is a combination of data (called properties) and the actions (methods) that can be used on the data.
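A short pipeline shows what passing objects buys you. In this sketch each stage works with a named property of the process objects instead of slicing up text; $top is an illustrative variable name.

```powershell
# Each item coming out of Get-Process is an object, so later
# cmdlets can sort and select on its properties directly.
$top = Get-Process |
    Sort-Object -Property WorkingSet64 -Descending |
    Select-Object -First 3 -Property Name, Id, WorkingSet64

# The three processes using the most memory right now.
$top
```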

The hard part, however, lies in aligning the objects. The kind of object delivered by one cmdlet has to match up with the kinds of objects accepted by the receiving cmdlet. Text is a very simple kind of object, so if you’re working with text, lining up items is easy. Other objects aren’t so rudimentary.

How to figure it out? Welcome to the get-member cmdlet. If you want to know what type of object a cmdlet produces, pipe it through get-member. For example, if you’re trying to figure out the processes running on your computer, and you’ve narrowed down the options to the get-process cmdlet, here’s how you find out what the get-process cmdlet produces:

get-process | get-member

Running that command produces a long list of properties and methods for get-process, but at the very beginning of the list you can see the type of object that get-process creates:

TypeName: System.Diagnostics.Process

The screenshot below also tells you that get-process has properties called Handles, Name, NPM, PM, SI, VM, and WS.
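The full get-member listing is long, so it can help to narrow it. The sketch below assumes the -MemberType parameter of Get-Member and reads the type name straight from one process object; $typeName is an illustrative name.

```powershell
# Show only the short alias-style properties on process objects.
Get-Process | Get-Member -MemberType AliasProperty

# Show only the methods, the actions you can call on each process.
Get-Process | Get-Member -MemberType Method

# Grab just the type name from the first process object.
$typeName = (Get-Process | Select-Object -First 1).GetType().FullName
$typeName
```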

If you want to manipulate the output of get-process so that you can work with it (as opposed to having it display a long list of active processes on the monitor), you need to find another cmdlet that will work with System.Diagnostics.Process as input. To find a willing cmdlet, you simply use … wait for it … PowerShell:

get-command -ParameterType System.Diagnostics.Process

That produces a list of all of the cmdlets that can handle System.Diagnostics.Process.
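Once you have a candidate from that list, you can check that the objects really do line up. This sketch pipes the current shell's own process (found through the automatic $PID variable) into Stop-Process, with -WhatIf added so nothing is actually stopped; $takers is an illustrative name.

```powershell
# Commands that have a parameter of type System.Diagnostics.Process.
$takers = Get-Command -ParameterType System.Diagnostics.Process
$takers | Select-Object -First 5 -ExpandProperty Name

# Pipe a process object into one of them. -WhatIf only reports
# what would happen, so the shell keeps running.
Get-Process -Id $PID | Stop-Process -WhatIf
```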

Some cmdlets are notorious for taking nearly any kind of input. Chief among them: where-object. Perhaps confusingly, where-object loops through each item sent down the pipeline, one by one, and applies whatever selection criteria you request. A special variable called $_ stands for the current item, so you can refer to each item in the pipe, one at a time.

Say you wanted to come up with a list of all of the processes running on your machine that are called “svchost” — in PowerShell speak, you want to match on a Name property of svchost. Try this PowerShell command:

get-process | where-object {$_.Name -eq "svchost"}

The where-object cmdlet looks at each System.Diagnostics.Process item and compares the .Name of that item to “svchost”; if the item matches, it gets spit out the end of the pipe and typed on your monitor.
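The same filter can be written a few ways. The sketch below filters on the name of the current shell's own process so that it always finds something; swap in 'svchost' to match the example above. The variable names ($me, $a, $b, $c) are illustrative, and the shorter second form assumes PowerShell 3 or later.

```powershell
# Name of the process running this shell, used as a target that is sure to exist.
$me = (Get-Process -Id $PID).Name

# Script-block form, using $_ for the current item.
$a = Get-Process | Where-Object { $_.Name -eq $me }

# Simplified comparison syntax (PowerShell 3 and later).
$b = Get-Process | Where-Object Name -eq $me

# Often the cmdlet can filter by itself, which skips the pipe entirely.
$c = Get-Process -Name $me

# How many matches each approach found.
@($a).Count, @($b).Count, @($c).Count
```

Letting get-process do the filtering is usually the tidiest option when the cmdlet offers a matching parameter; where-object earns its keep when you need a condition the cmdlet doesn't support.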