Application Whitelisting Using Software Restriction Policies

Software Restriction Policies (SRP) allow administrators to control which applications are permitted to run on Microsoft Windows. SRP is a Windows feature that can be configured as a local computer policy or as a domain policy through Group Policy in Windows Server 2003 domains and above. Used as a whitelisting technique, SRP improves the security of the domain: because administrators decide which applications are allowed to run on client PCs, malicious programs are prevented from running.

Blacklisting is a reactive technique that does not scale well to the increasing number and variety of malware. Many attacks cannot be blocked by blacklisting because they exploit previously undiscovered vulnerabilities, known as zero-day vulnerabilities.

Application whitelisting, on the other hand, is a practical technique in which only a limited number of programs are allowed to run and all other programs are blocked by default. This makes it hard for attackers to get into the network, since a successful attack must either exploit one of the allowed programs on the user’s computer or get around the whitelisting mechanism. This approach should not be seen as a replacement for standard security software such as antivirus or firewalls; it is best used in conjunction with them.

Since Microsoft Windows operating systems have SRP functionality built in, administrators can readily configure an application whitelisting solution that allows only specific executable files to run. Software Restriction Policies can also restrict which application libraries executables are permitted to use.
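As a rough illustration of the two controls mentioned above (a default-deny level for executables and optional enforcement on libraries), the following Python sketch interprets a set of SRP settings. It assumes the settings are stored as the registry values `DefaultLevel`, `TransparentEnabled`, and `PolicyScope` under `HKLM\SOFTWARE\Policies\Microsoft\Windows\Safer\CodeIdentifiers`, with the numeric meanings shown; verify these against your own environment before relying on them. The function names are hypothetical, and the sketch works on a plain dictionary rather than reading the registry.

```python
# Sketch only: the value names and numbers below are assumptions about how
# SRP settings are stored under
# HKLM\SOFTWARE\Policies\Microsoft\Windows\Safer\CodeIdentifiers.
DEFAULT_LEVELS = {
    0x00000: "Disallowed",
    0x20000: "Basic User",
    0x40000: "Unrestricted",
}
ENFORCEMENT = {
    0: "no enforcement",
    1: "all files except libraries (DLLs)",
    2: "all files including libraries (DLLs)",
}
POLICY_SCOPE = {
    0: "all users",
    1: "all users except local administrators",
}


def describe_policy(values):
    """Translate raw SRP values (a dict) into readable descriptions."""
    return {
        "default_level": DEFAULT_LEVELS.get(values.get("DefaultLevel"), "unknown"),
        "enforcement": ENFORCEMENT.get(values.get("TransparentEnabled"), "unknown"),
        "scope": POLICY_SCOPE.get(values.get("PolicyScope"), "unknown"),
    }


def is_whitelisting(values):
    """True when the default is 'Disallowed' and enforcement is switched on."""
    return (
        values.get("DefaultLevel") == 0x00000
        and values.get("TransparentEnabled") in (1, 2)
    )


# Hypothetical settings: default-deny, libraries included, applied to all users.
policy = {"DefaultLevel": 0x00000, "TransparentEnabled": 2, "PolicyScope": 0}
print(describe_policy(policy))
print(is_whitelisting(policy))  # True
```

Working on a dictionary keeps the sketch runnable on any platform; on a Windows host the same values could be read from the registry and passed in unchanged.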

The NSA Information Assurance Directorate’s (IAD) Systems and Network Analysis Center (SNAC) has recommended certain SRP settings. Test any configuration changes on a test network or on a small set of test computers to make sure the settings are correct before applying the change to the whole domain.

There are known issues on certain Windows versions to consider. One is a minor usability issue: double-clicking a document may not open the associated viewer application. Another is that the software update method that lets users apply patches manually may not function correctly once SRP is enforced. Microsoft may address these issues with a hotfix. Automatic updates are not affected by SRP whitelisting and continue to function correctly. Because of issues like these, SRP settings should be tested thoroughly to avoid causing a widespread problem in your production environment.

Path-based SRP rules are recommended because, after a good deal of testing, they have shown no noticeable performance impact on hosts. Other rule types may provide greater security benefits than path-based rules, but they have a greater impact on host performance. File hash rules are more difficult to manage and must be updated each time a file is installed or updated. Certificate rules are limited because not all application files are digitally signed by their software publishers.
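The maintenance difference between hash rules and path rules can be seen in a small Python sketch. It is not how SRP itself evaluates rules: the helper names are hypothetical, SHA-256 is used purely for illustration, and the byte strings stand in for real file contents. The point is only that a hash rule stops matching as soon as a file changes, while a path rule keeps matching anything under the allowed folder.

```python
import hashlib
from pathlib import PureWindowsPath


def sha256_of(content: bytes) -> str:
    """Hex digest of the given file contents."""
    return hashlib.sha256(content).hexdigest()


def allowed_by_hash(content: bytes, allowed_hashes: set) -> bool:
    """Hash rule: the exact file contents must have been approved."""
    return sha256_of(content) in allowed_hashes


def allowed_by_path(program: str, allowed_dir: str) -> bool:
    """Path rule: the program must sit somewhere under the allowed folder."""
    return PureWindowsPath(allowed_dir) in PureWindowsPath(program).parents


old_build = b"editor build 1.0"  # stand-in for the original file contents
new_build = b"editor build 1.1"  # stand-in for the file after an update
hash_rules = {sha256_of(old_build)}

print(allowed_by_hash(old_build, hash_rules))  # True
print(allowed_by_hash(new_build, hash_rules))  # False: rule needs updating
print(allowed_by_path(r"C:\Program Files\Editor\editor.exe",
                      r"C:\Program Files\Editor"))  # True before and after the update
```

The same property that makes path rules easy to maintain also means that the allowed folders should not be writable by ordinary users, since anything placed there would be permitted to run.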

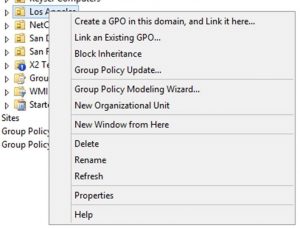

Implementing SRP in an Active Directory domain involves the following steps:

1. Review the domain to find out which applications are operating on domain computers.

2. Configure SRP to operate in whitelisting mode.

3. Choose which applications must be permitted to run and create additional SRP rules as required.

4. Test the SRP rules and create additional rules as needed.

5. Deploy SRP to successively larger Organizational Units until it is applied to the entire network.

6. Monitor SRP continuously and adjust the rules when needed.
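The review and test steps above can be approximated offline before any policy is deployed. The Python sketch below takes an inventory of program paths collected from domain computers and reports which ones a candidate set of allowed folders would block. The function name, the inventory, and the folder list are all hypothetical, and the matching is a simplification of real SRP path rules (no wildcards or environment variables).

```python
from pathlib import PureWindowsPath


def blocked_programs(inventory, allowed_dirs):
    """Return the inventory entries that fall outside every allowed folder."""
    allowed = [PureWindowsPath(d) for d in allowed_dirs]
    blocked = []
    for program in inventory:
        parents = PureWindowsPath(program).parents
        # Default-deny: a program is blocked unless some allowed folder
        # is one of its parent directories (comparison is case-insensitive).
        if not any(d in parents for d in allowed):
            blocked.append(program)
    return blocked


# Hypothetical inventory gathered during the review step.
inventory = [
    r"C:\Windows\System32\notepad.exe",
    r"c:\program files\Editor\editor.exe",
    r"C:\Users\alice\Downloads\tool.exe",
]
candidate_rules = [r"C:\Windows", r"C:\Program Files"]

for program in blocked_programs(inventory, candidate_rules):
    print("would be blocked:", program)
# would be blocked: C:\Users\alice\Downloads\tool.exe
```

Each blocked entry is either a program that needs an extra rule or one that should stay blocked; repeating the check for each Organizational Unit gives a preview of the impact before the rollout widens.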

An SRP configuration as described above can substantially strengthen the security posture of a domain while still letting users run the applications they need to stay productive.