5 Ways Your Business Benefits from Automation

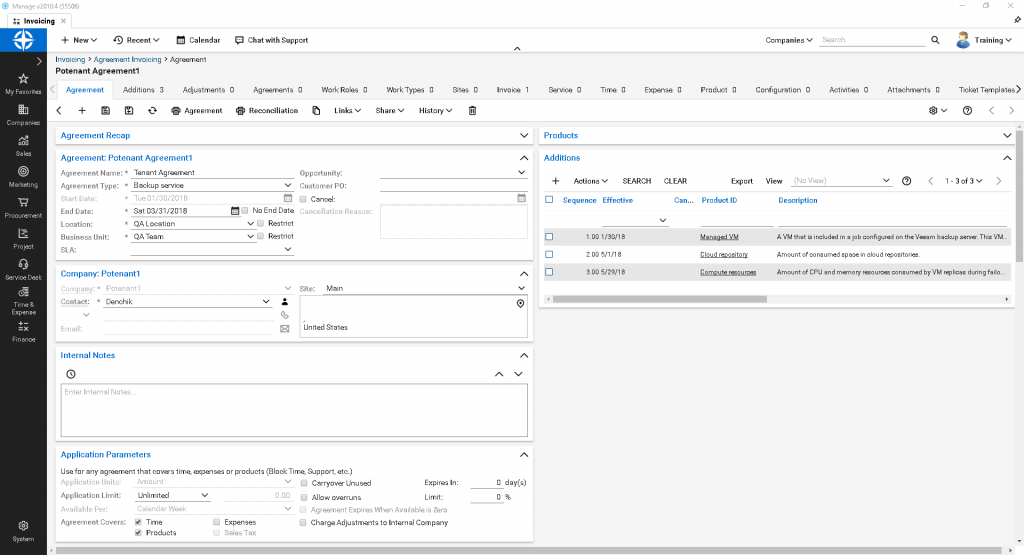

1: Improved Organization

Automation tools distribute information seamlessly. For instance, when you automatically create a quote for a new project and can invoice it from the same system, all of the information regarding the project is in the same place. You don’t need to go looking for it across multiple systems.

Automation sends information where you need it, which keeps your records current and saves your team from spending time searching for them.

2: Reduced Time Spent on Redundant Tasks

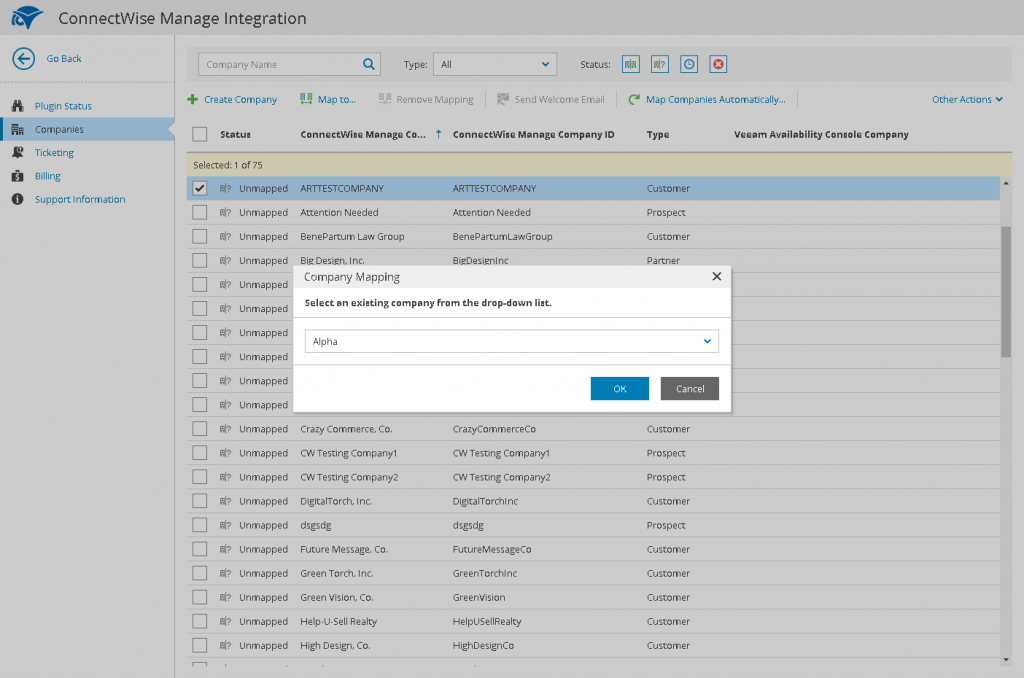

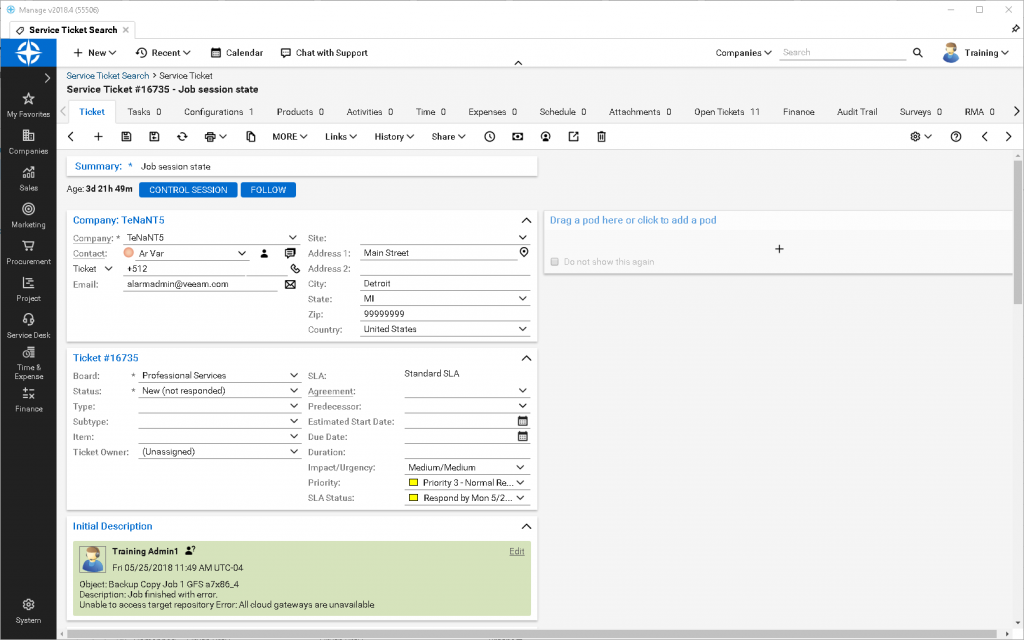

One of the biggest benefits of IT automation is the time your team saves on manual, repeatable tasks. Automation reduces the time spent creating tickets and configuring applications, which adds up. Based on estimates, it takes a tech 5 to 7 minutes to open a new ticket because of manual steps such as assigning companies and contact information, finding and adding configurations, and more.

With automatic ticket routing, you can reduce the time spent opening each ticket to about 30 seconds. For a tech who works on 20 tickets a day, that saves at least 90 minutes a day, or 7.5 hours over a five-day week, in additional productivity.
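The arithmetic behind those figures can be checked with a short sketch. The per-ticket times and ticket count come from the estimates above; the five-day work week and all names in the code are assumptions made for illustration.

```python
# Figures from the estimates in the article.
MANUAL_MINUTES_PER_TICKET = (5.0, 7.0)  # low and high estimate for opening a ticket by hand
AUTOMATED_MINUTES_PER_TICKET = 0.5      # 30 seconds with automatic ticket routing
TICKETS_PER_DAY = 20
WORKDAYS_PER_WEEK = 5                   # assumption: a five-day work week


def minutes_saved_per_day(manual_minutes, automated_minutes, tickets_per_day):
    """Minutes one tech saves per day when each ticket takes less time to open."""
    return (manual_minutes - automated_minutes) * tickets_per_day


# Daily savings at the low and high end of the manual estimate.
low_daily, high_daily = (
    minutes_saved_per_day(m, AUTOMATED_MINUTES_PER_TICKET, TICKETS_PER_DAY)
    for m in MANUAL_MINUTES_PER_TICKET
)

# Convert the daily minutes into weekly hours.
low_weekly_hours = low_daily * WORKDAYS_PER_WEEK / 60
high_weekly_hours = high_daily * WORKDAYS_PER_WEEK / 60

print(f"Saved per day: {low_daily:.0f} to {high_daily:.0f} minutes")
print(f"Saved per week: {low_weekly_hours:.1f} to {high_weekly_hours:.1f} hours")
```

The 90 minutes a day and 7.5 hours a week quoted above correspond to the low end of the 5 to 7 minute estimate. At the high end the savings would be larger.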

3: Well-Established Processes

The best way to get the most benefit from IT automation is to set up workflows and processes in advance. Establishing these workflows creates a set of standards everyone on your team can follow without additional work. Once the workflow rules are in place, they bring consistency and efficiency to your operations and ensure you deliver a consistent experience to your customers, regardless of which tech handles their tickets.

Furthermore, the documented, repeatable processes can help you scale by making it easier to accomplish more in less time. Your team can focus on providing excellent customer service and doing a great job when they don’t need to waste time thinking about the process itself.

4: Multi-department Visibility

Maintaining separate spreadsheets, accounts, and processes makes it difficult to really see how well your company is doing. To see how many projects are completed a day or how quickly projects are delivered, you may need to gather information about each employee’s performance to view the company as a whole.

Automation tools increase visibility into your business’s operations by centralizing data in a way that makes it easy to figure out holistically how your company performs, in addition to the performance of each individual team member. You can even isolate the performance of one department.

5: Increased Accountability

With so many different systems in place, it can be difficult to know exactly what is happening at every moment. For instance, if an employee deleted tasks they didn’t want to do, you’d need processes in place to know it had happened. What if the deletion was an accident? How would you know something was accidentally deleted, and how would you get the information back?

Automation reduces human errors by providing a digital trail for your entire operation in one place. It provides increased accountability for everybody’s actions across different systems, so issues like these aren’t a problem.
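As a rough illustration of what such a digital trail might look like, here is a minimal sketch of a task store that records who did what and keeps deleted tasks recoverable. The class, its method names, and the sample users are hypothetical and do not reflect any particular product's API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AuditedTaskStore:
    """Hypothetical in-memory task store that logs every action it performs."""
    tasks: dict = field(default_factory=dict)   # active tasks by id
    trash: dict = field(default_factory=dict)   # deleted tasks, kept for recovery
    trail: list = field(default_factory=list)   # append-only record of actions

    def _record(self, user, action, task_id):
        # Every change is logged with a UTC timestamp, the user, and the action.
        self.trail.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "task_id": task_id,
        })

    def add(self, user, task_id, description):
        self.tasks[task_id] = description
        self._record(user, "add", task_id)

    def delete(self, user, task_id):
        # Soft delete: move the task to the trash instead of discarding it.
        self.trash[task_id] = self.tasks.pop(task_id)
        self._record(user, "delete", task_id)

    def restore(self, user, task_id):
        self.tasks[task_id] = self.trash.pop(task_id)
        self._record(user, "restore", task_id)


store = AuditedTaskStore()
store.add("alice", "T-1", "Patch the file server")
store.delete("bob", "T-1")      # accidental or not, the trail shows who deleted it
store.restore("alice", "T-1")   # and the task can be brought back

for entry in store.trail:
    print(entry["user"], entry["action"], entry["task_id"])
```

Because deletions are logged and reversible in this sketch, both questions raised above have an answer: the trail shows that a task was deleted and by whom, and the trash makes it recoverable.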

Automation is an easy way to develop the increased accountability, visibility, and centralized processes your company needs to grow and serve more clients. When selecting automation tools for your business, make sure the solutions you’re evaluating help in these key areas. Technology that helps you manage workflows, automate redundant tasks, and provide a consistent experience to all your customers will help you deliver superior service and improve your bottom line.

This article was provided by our service partner: connectwise.com