Windows 10 October 2018 Update

Earlier today, Yusuf Mehdi announced the Windows 10 October 2018 Update, the newest feature update for Windows 10. I’m excited to share our October 2018 Update rollout plans, how you can get the update today, plus some new update experience enhancements.

How to get the Windows 10 October 2018 Update

As with prior Windows 10 feature rollouts, our goal is to deliver the October 2018 Update in a phased and controlled rollout to provide a great update experience for all. We will begin the global rollout via Windows Update in the coming weeks. As with previous rollouts, we will use real-time feedback and telemetry to update your device when data shows your device is ready and will have a great experience. You don’t have to do anything to get the update; it will roll out automatically to you through Windows Update.

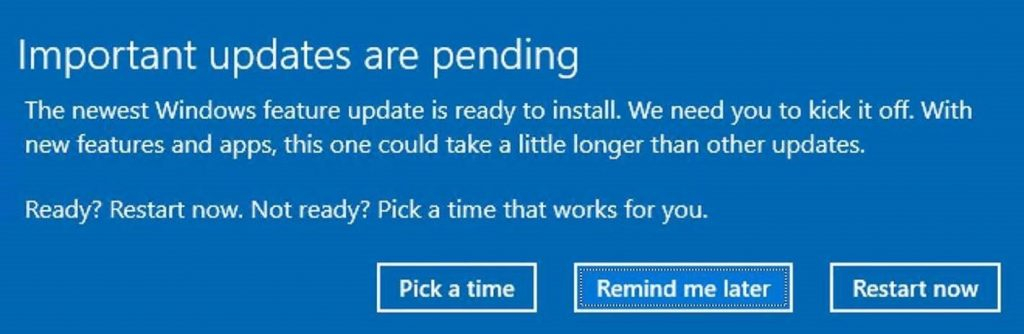

Once the update is downloaded to your device and ready to be installed, we’ll notify you. You can then pick a time that won’t disrupt you to finish the installation and reboot. We are continually working to improve the update experience with each new release of Windows 10.

The last Windows 10 feature update rollout, the April 2018 Update, used machine learning (ML) to identify devices that were ready to update, incorporating key attributes like compatibility data. By leveraging machine learning we were able to roll out safely and quickly, and as a result the April 2018 Update is now the most widely used version of Windows 10. Further, our AI/ML-targeted rollout approach led to the lowest volume of call and online support requests for any release of Windows 10.

With the October 2018 Update, we are expanding our use of machine learning and intelligently selecting devices that our data and feedback predict will have a smooth update experience. As we broaden the phased rollout, we will further enhance the performance of our machine learning model by incorporating more device signals, such as improved driver telemetry, and by weighting key features such as anti-malware software. As we did with the April 2018 Update, we will proactively monitor all available feedback and update experience data, make the appropriate product updates when we detect issues, and adjust the rate of rollout as needed to ensure all devices have the best possible update experience.
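To make the idea of a phased, model-gated rollout concrete, here is a minimal sketch in Python. It is an illustration only and not Microsoft's actual implementation: the `Device` fields, the `readiness_score` value, the `score_threshold`, and the `rollout_fraction` throttle are all hypothetical names and parameters chosen for this example.

```python
from dataclasses import dataclass


@dataclass
class Device:
    device_id: str
    readiness_score: float    # hypothetical model output in [0, 1]
    has_blocking_issue: bool  # hypothetical known-compatibility-issue flag


def select_for_rollout(devices, score_threshold, rollout_fraction):
    """Return the IDs of devices to offer the update to in this phase."""
    # Never offer the update to a device with a known blocking issue.
    eligible = [
        d for d in devices
        if not d.has_blocking_issue and d.readiness_score >= score_threshold
    ]
    # Offer the update to the highest-scoring devices first.
    eligible.sort(key=lambda d: d.readiness_score, reverse=True)
    # rollout_fraction throttles the phase; it could be lowered when
    # feedback shows problems and raised as confidence grows.
    limit = int(len(eligible) * rollout_fraction)
    return [d.device_id for d in eligible[:limit]]


devices = [
    Device("a", 0.95, False),
    Device("b", 0.40, False),
    Device("c", 0.99, True),
    Device("d", 0.80, False),
]
print(select_for_rollout(devices, score_threshold=0.7, rollout_fraction=0.5))
```

In this toy data set, device `c` is held back despite its high score because it has a blocking issue, and raising `rollout_fraction` to `1.0` would also offer the update to device `d`.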

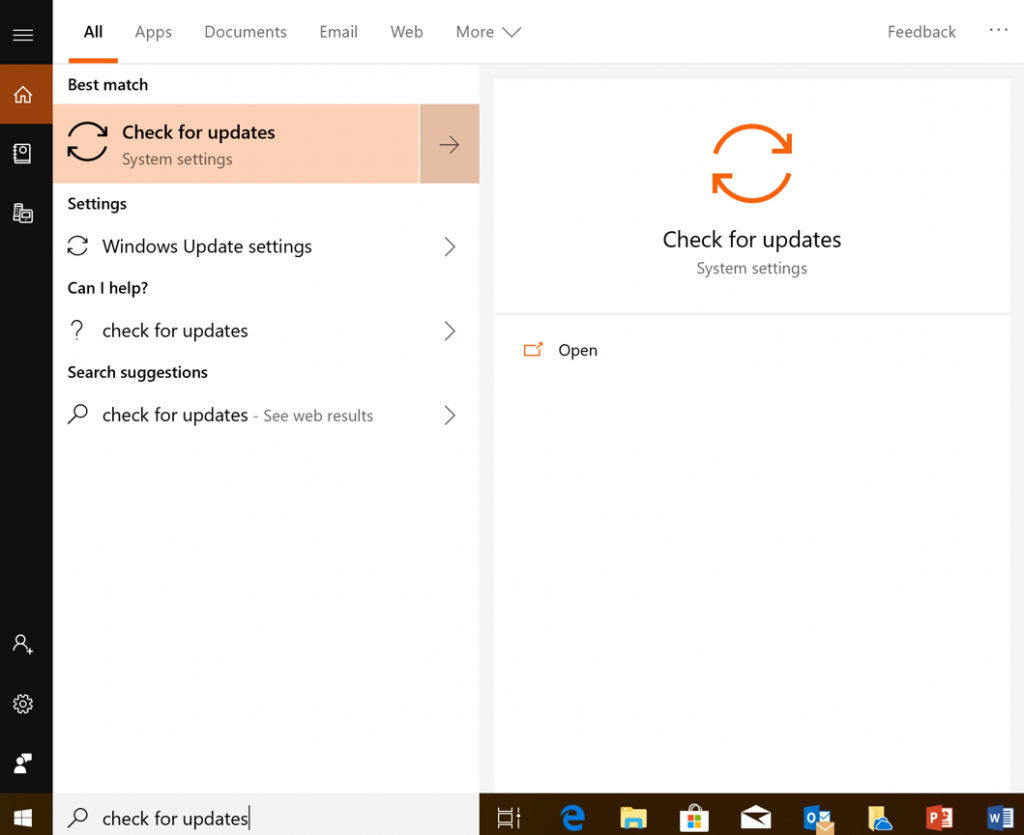

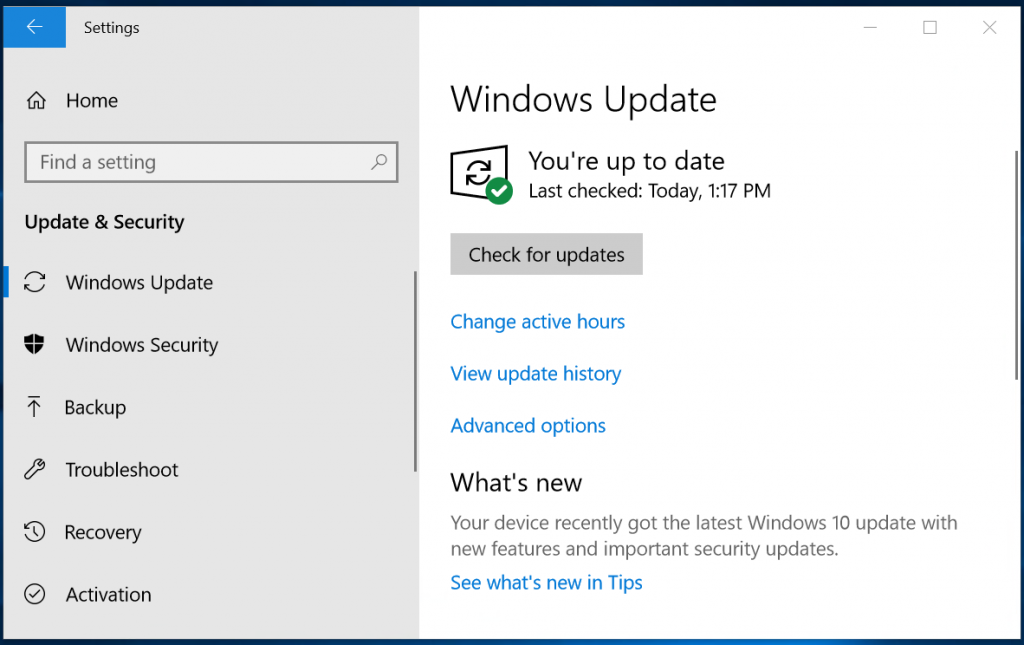

Want the Windows 10 October 2018 Update today? Start by manually checking for updates

While we encourage you to wait until the update is offered to your device, if you’re an advanced user on an actively serviced version of Windows 10 and would like to install the Windows 10 October 2018 Update now, you can do so by manually checking for updates. In the Search box in the taskbar, type “Check for updates.” Once there, simply click “Check for updates” to begin the download and installation process. We are also streamlining the experience for users who manually check for updates by offering the update only to devices with no known key blocking issues, based on our ML model. If we detect that your device has a compatibility issue, we will not install the update until that issue is resolved, even if you “Check for updates.” You can also watch this video that outlines how to get the October 2018 Update.

If you’re using a Windows 10 PC at work, you will need to check with your IT administrator for details on your organization’s specific plans to update.

Improving the update experience

We have heard clear feedback that while our users appreciate that updates keep their devices secure, they find the update experience can sometimes be disruptive. The October Update includes several improvements to the update experience to offer more control and further reduce disruptions.

Intelligent scheduling of update activity: For our many mobile users on laptops and 2-in-1 devices, we have improved Windows’ ability to know when a device will not be in use and perform certain update activities then, so as not to disrupt the user. This ability to update at night when plugged in and not on battery power will help hide update activity and minimize user disruption from updates. To further minimize disruption (in case your system is updating overnight), Windows also silences audio when it wakes for Windows Updates. If your device hasn’t updated for several nights, we will then suggest you plug in your device so that we can update at night.

Intelligent reboot scheduling: Windows Update will now automatically determine the least disruptive opportunity to reboot, outside of Active Hours, and will use an enhanced machine-learning-powered activity check that can determine if a user is going to be away for a while or is only stepping away temporarily.
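The reboot decision described above can be thought of as two checks: the current time must fall outside Active Hours, and the user must be predicted to be away long enough. The Python sketch below illustrates that logic under stated assumptions. It is not how Windows Update is implemented; `predicted_away_minutes` stands in for the output of the activity check, and `min_away_minutes` is a hypothetical threshold.

```python
from datetime import time


def in_active_hours(now, start, end):
    """True if `now` falls inside the Active Hours window [start, end)."""
    if start <= end:
        return start <= now < end
    # The window wraps past midnight, e.g. 22:00 to 06:00.
    return now >= start or now < end


def may_reboot(now, active_start, active_end,
               predicted_away_minutes, min_away_minutes=30):
    """Allow a reboot only outside Active Hours and during a long absence.

    predicted_away_minutes is a hypothetical stand-in for the activity
    check; min_away_minutes is an assumed threshold for this example.
    """
    outside = not in_active_hours(now, active_start, active_end)
    return outside and predicted_away_minutes >= min_away_minutes


# Active Hours of 08:00 to 17:00: a reboot at 23:00 is allowed only if the
# user is predicted to be away for a while.
print(may_reboot(time(23, 0), time(8, 0), time(17, 0), predicted_away_minutes=120))
print(may_reboot(time(23, 0), time(8, 0), time(17, 0), predicted_away_minutes=5))
```

The first call allows the reboot, while the second does not because the predicted absence is too short, which mirrors the distinction between being away for a while and only stepping away temporarily.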

Faster updates, less down time: We’ve also made further improvements to the feature update installation process. During the rollout of the October Update, we are aiming to shorten the amount of time your device is offline during updates by up to 31% compared to the Windows 10 April 2018 Update (based on results from the Windows Insider Program).

Smaller downloads: In the October Update we are introducing a new update package delivery design for monthly quality updates that creates a compact update package for easier and faster deployment. Users will benefit from the new small update size when installing applicable quality updates as they are 40% more efficient.

Enhanced privacy controls

We continue to focus on putting our customers in control, so in the October Update we are enhancing the privacy choices and controls available to users to manage their privacy. We are now enabling each new account on a device to personally tailor the main privacy settings, instead of only the initial user who sets up the device. Furthermore, during new device setup, we now offer an activity history page that allows users the opportunity to opt in to sending activity history to Microsoft, to help improve cross-device experiences. This allows users to pick up where they left off in various activities (such as working on a Word document) on their other devices (Learn more about activity history).

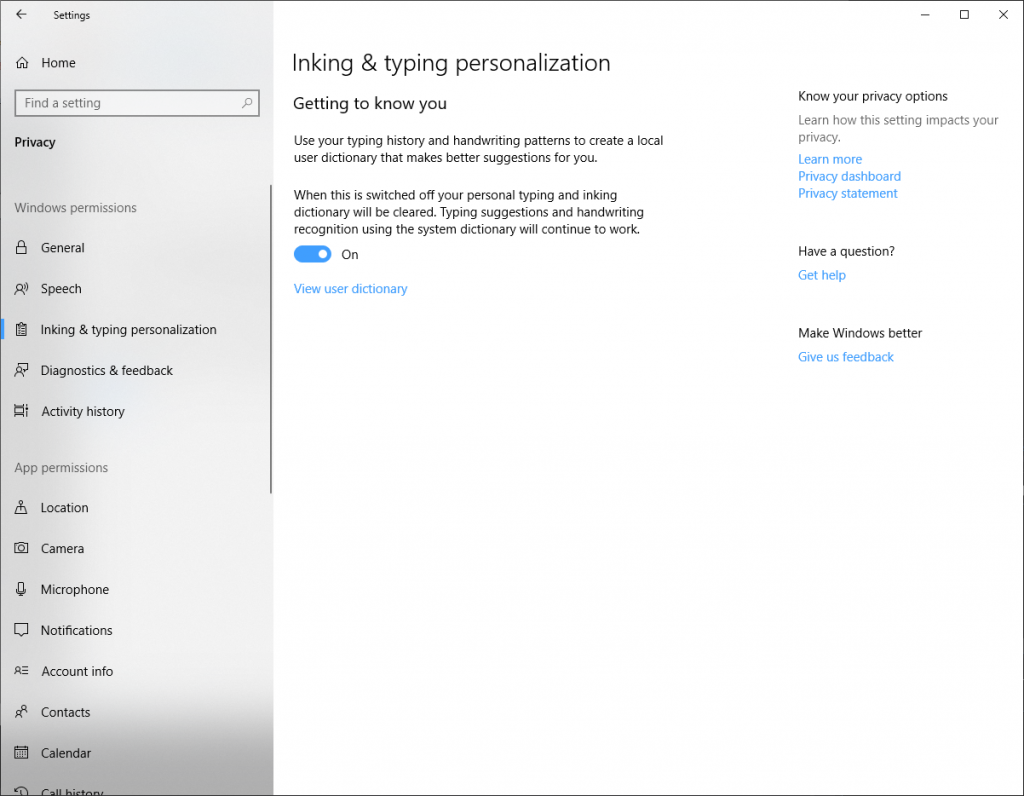

Additionally, we are splitting Inking & typing personalization out from the Speech privacy page. This enables more granular control of your inking and typing personalization data by managing it separately from your online speech recognition data. Learn more about online speech recognition and inking & typing personalization.

Semi-Annual Channel (Targeted) released

For our commercial customers, the release of Windows 10, version 1809 on October 2, 2018 marks the start of the servicing timeline for the Semi-Annual Channel (“Targeted”) release. Beginning with this release, all future feature updates of Windows 10 Enterprise and Education editions that release around September will have a 30-month servicing timeline. Just as we are beginning to roll out the October Update in phases to consumers, we recommend IT administrators do the same within their organizations to validate that apps, devices, and infrastructure used by their organization work well with the new release before broadly deploying. We use data to guide our phased consumer rollout and encourage commercial customers to do the same through Windows Analytics. The update is now available through Windows Server Update Services (WSUS), Windows Update for Business (WUfB) and System Center Configuration Manager’s (SCCM) phased deployment. For an overview of what’s new and what’s changed, please see What’s new for IT pros in Windows 10, version 1809.

Continuously evolving Windows 10 and the update experience

We’re excited to bring you the latest Windows 10 features and improvements and hope that you enjoy the improved update experience. Please provide us feedback as we continue our journey to evolve the update experience, so that our great new product and security features and other enhancements arrive without disruption.

This article was provided by our service partner: Microsoft.com